Facebook: The Aspirational Government

Is it far-fetched to speculate that Facebook’s long-term goal may be to become more than a mere place to sell ads, and instead a global powerhouse for the mass manipulation of ideologies, perceptions and beliefs? Some might argue that this is already the case, but I want to explore the thought further.

First, the way Facebook responds to the perceived negative impact of its actions (and inactions) makes me wonder to what extent the company cares about maximizing its profits, at least in the long term. The ongoing ad boycott is nothing but a minor dent in the company’s ever-increasing revenue (more on that later).

Secondly, the company knows it has the world’s most sought-after digital advertising channel, one that small and medium-sized businesses cannot avoid if they want to reach their audiences. This gives the company a phenomenally powerful, dominant position.

Lastly, it’s worth mentioning that the level of control the CEO has, coupled with the lack of any real oversight of a company with such a global impact on our lives, is astonishing. Mark Zuckerberg is the chairman of the board of directors and controls 60% of the voting shares[1], giving him full control over the company’s future. His word is law. In most companies, the board of directors holds real voting power. Had that been the case at Facebook, the company’s mishaps of the past couple of years could have had quite different outcomes. But under a single ruler’s will, things are different.

To me, that’s why Facebook seems able to take such a different stance from other big tech companies.

Catering to Power

Facing continuous pressure from governments and institutions, Twitter was the first of the Big Tech companies to ban political ads[2] from its platform altogether. Google followed suit[3] with a similar approach, restricting ads targeted at people based on their political leanings.

Facebook went ahead and did nothing of the sort.

Not only did the company say no restrictions would apply to political targeting, it doubled down, saying it wouldn’t fact-check political ads at all[4]. In a speech at Georgetown University, Mark Zuckerberg said “People having the power to express themselves at scale is a new kind of force in the world[…]”, conveniently omitting that some have a greater opportunity to propagate their message, provided they pay for the privilege.

Facebook understands that maintaining its dominant position in the long term takes more than maintaining its current revenue flow. Its aim is to avoid government scrutiny, potential fines and, most importantly, real regulation.

The best strategy lies in aligning itself and playing along with the political powers of the day.

While Twitter finally stepped up and started fact-checking and labeling Donald Trump’s tweets, Facebook allowed his inflammatory rhetoric to remain available and to keep spreading on the platform.

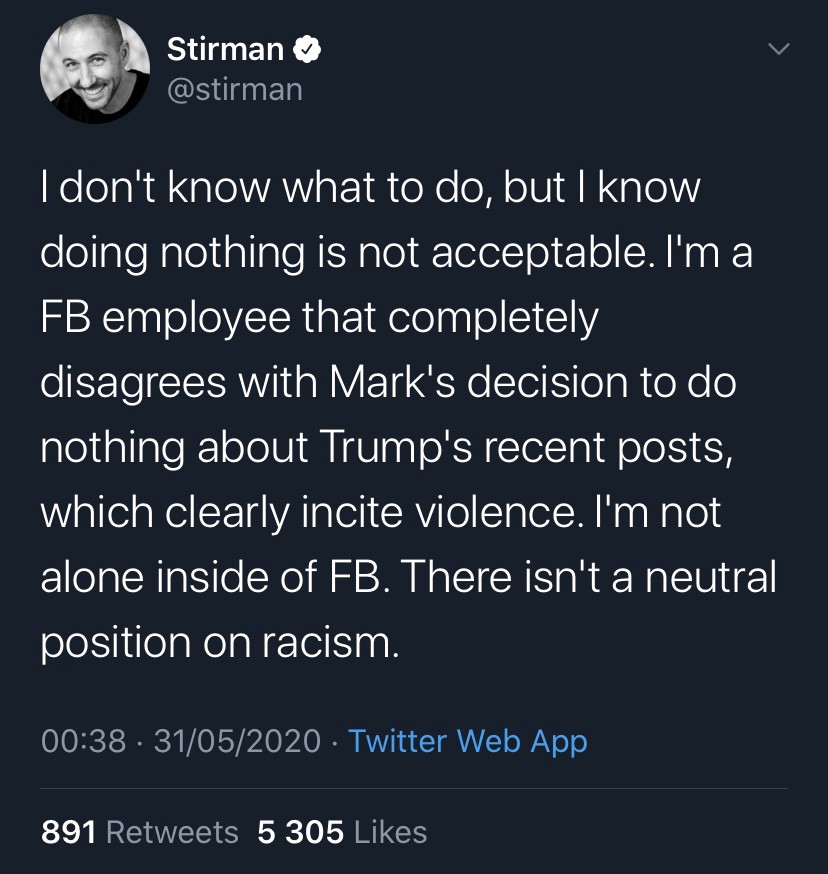

Jason Stirman, product R&D at Facebook.

So does Facebook not care at all about what employees, users and clients think of its actions? After all, Mark Zuckerberg did say privately that “[…]all these advertisers will be back on the platform soon enough.”[5] To some degree he isn’t wrong: first, most companies participating in the boycott are doing so for only one month; second, the 100 highest-spending brands account for only 6% of the company’s ad revenue[6], meaning the many small and mid-sized companies that can’t afford to stop advertising will keep doing so.

Just as the 2017 boycott of YouTube had no long-term financial impact on that company, this one will have none on Facebook.

That tangent aside, Facebook does appear to care about at least one specific individual. Following the internal outburst against Mark Zuckerberg’s decision to keep the post up, the CEO had a “productive” phone call with the U.S. president[7]. This political proximity is nothing new. In September 2019, Mark Zuckerberg met Donald Trump at the White House[8], followed soon after by a dinner in October[9]. The content of those meetings and calls? Undisclosed.

Facebook’s VP Andrew Bosworth said Donald Trump got elected in 2016 because “he ran the single best digital ad campaign I’ve ever seen from any advertiser”[10]. That is, if you set aside internal employee communications revealing that the company knew Cambridge Analytica was extracting and misusing its users’ data almost a year before the 2016 U.S. election[11], or ignore the fact that Facebook and Cambridge Analytica worked side by side for Donald Trump’s 2016 campaign[12].

Meanwhile, advertisers have purchased more than $887m worth of political ads on Facebook since May 2018. Of that total, at least $21m was spent by Facebook’s №1 customer for political ads: the campaign to re-elect President Donald Trump in 2020[13].

So is the motive behind such proximity to ensure that revenue from political ads doesn’t dry up? Roughly $1b in political ad revenue seems negligible next to Facebook’s $69.5b in global revenue in 2019[14]. So maybe the motive goes beyond money.

Blurring The Lines

If I had to speculate, I’d say Facebook’s mission is no longer about connecting the world but about acting on that connection and influencing it. As we watch its ever-expanding reach while it “connects” the world, we assume its primary aim is to modify its users’ consumer behavior for classic advertising, with perhaps a sprinkle of politics on the side.

But what if there were another behavior-modification goal, geared towards more abstract concepts such as thoughts, opinions and beliefs? What if it actually instrumentalized its platform to nudge societies into shifting their ethical and moral standards? Or a nation’s political leaning? Or individuals’ acceptance or rejection of certain ideologies, tilting their preference from democratic to authoritarian governments?

I’m not saying Facebook doesn’t already influence many aspects of our society. The difference is that, until now, this influence seems to have been a by-product of its algorithms, its network effects, the ease with which users get tricked into providing their data, and a general disregard for collateral damage.

In fact, Facebook has never made a point of hiding its ambition to manipulate its users for its own goals.

In 2010, the company’s scientists claimed to have boosted turnout in the U.S. midterm elections by 340k voters by experimenting with peer-pressure methods, such as displaying an “I Voted” sticker[15].

In 2012, Facebook modified the newsfeed of 700k users to display more positive or more negative content, and demonstrably altered their mood, as shown by their comment patterns, which became more positive or more negative during the experiment[16].

Now, in 2020, the company plans to increase voter turnout for the U.S. election through a “Voting Information Center” that will “reduce the effectiveness of malicious networks that might try to take advantage of uncertainty and interfere with the election by getting clear, accurate and authoritative information to people”[17]. Coming from a historically opaque company that excuses itself with platitudes, what guarantees do we have that this turnout push is fair? How will we ever know that the algorithms used to boost turnout won’t be geared toward benefiting one party over another? And how would we know the actual reason behind a potential manipulation? Could it be done as a favor to a government in return for less scrutiny from regulators? Who would know?

Governing: The Ideal Segue?

Facebook certainly has an ambivalent stance when it comes to helping its users, considering the rampant misinformation it allows to circulate. It knowingly exacerbates the polarization of its user base[18], leading to an overall lower quality of life for those subjected to these attacks on the mind.

But I’m less worried about incompetence resulting in negative social consequences than I am about a potential marriage of technocrats and governmental power: so far, tech companies have mainly encountered pushback from, and been held accountable by, the market, organized workers or governments.

Although a market boycott is considered a plausible threat, as we’ve seen, it has so far posed no problem for the company’s revenue, since advertisers will inevitably want to tap into Facebook’s billions of eyeballs.

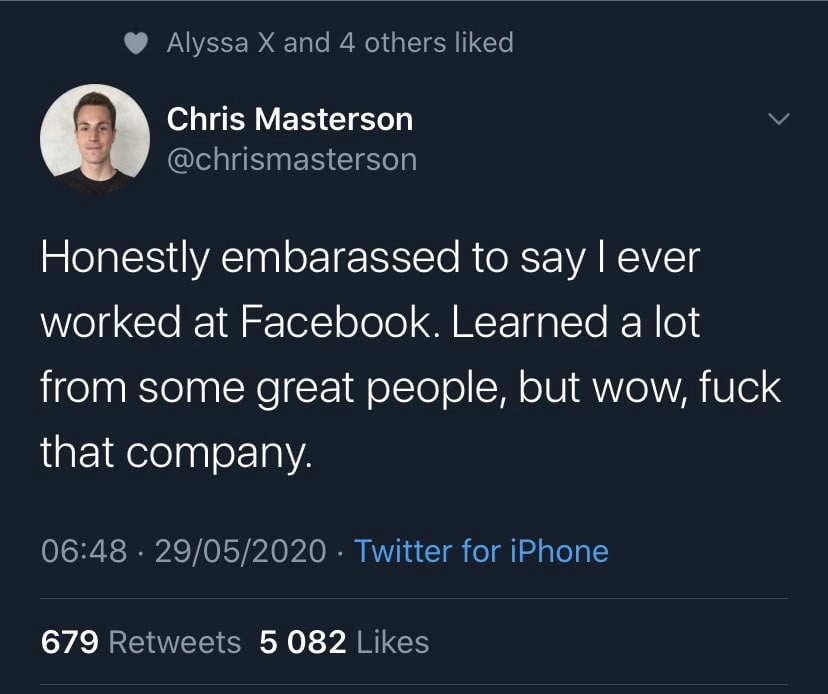

Chris Masterson, former Product Designer at Facebook/Instagram.

Tech workers concerned about the company’s direction play a crucial role in leaking how this increasingly secretive company handles things internally, though companies are cracking down on dissent more and more. Facebook fired an employee[19] who protested the company’s inaction over Trump’s inflammatory comments about the demonstrations against the killing of George Floyd.

I still believe tech workers are the best bet for bringing change to a company but, so far, they haven’t managed to do so at Facebook.

Ultimately, governments seem to be the only entities powerful enough to intervene and, through regulation or the threat of fines or a break-up, force a change in behavior. But what chance do we have of seeing that happen when Facebook has spent years trying to influence state prosecutors[20]? Or when it attempts to shape privacy laws by suggesting that regulators work side by side with the industry in order to be “helped” in defining them[21]?

Lobbying governments has always been part of the game, but Facebook seems keener than others to cultivate a close relationship with those in power, especially the current administration[22].

Is it far-fetched to imagine a desire for power consolidation between corporations and the state, and to wonder what that could mean? After all, there are enough examples of companies that benefited from collaborating with governments, and vice versa[23].

Could it simply be a move to cater to the platform’s increasingly right-leaning user base? Or is it precisely because of that specific demographic that Facebook is changing its approach to the American right and eventually embracing it? Corporations can already seem nation-like, with Facebook counting a third of the planet’s population among its users. What if it decided to act as a rogue nation?

Could Facebook want more than simply connecting the world? What would be the result of it evolving from an entity that facilitates discourse into one that steers political discourse, until it eventually becomes part of politics itself?

Sources

[1] Zuckerberg’s control of Facebook is near absolute – who will hold him accountable? The Guardian, 2018.

[2] Twitter bans political ads after Facebook refused to do so. CNBC, 2019.

[3] Google to restrict political adverts worldwide. BBC, 2019.

[4] Facebook Says It Won’t Back Down From Allowing Lies in Political Ads. NYT, 2020.

[5] Zuckerberg Tells Facebook Staff He Expects Advertisers to Return ‘Soon Enough’. The Information, 2020.

[6] The hard truth about the Facebook ad boycott: Nothing matters but Zuckerberg. CNN, 2020.

[7] Trump and Zuckerberg share phone call amid social media furor. Axios, 2020.

[8] Facebook’s Zuckerberg met with President Trump at the White House. CNBC, 2019.

[9] Trump hosted Zuckerberg for undisclosed dinner at the White House in October. NBC, 2019.

[10] Top Facebook exec says Trump ran the best digital ad campaign ever in 2016. CNBC, 2020.

[11] Facebook learned about Cambridge Analytica as early as September 2015, new documents show. CNBC, 2019.

[12] Facebook and Cambridge Analytica worked side by side at a Trump campaign office in San Antonio. Quartz, 2018.

[13] From Trump to Planned Parenthood, these are the Facebook pages spending the most money on political ads. Business Insider, 2019.

[14] Facebook’s advertising revenue worldwide from 2009 to 2019. Statista, 2020.

[15] Facebook’s I Voted sticker was a secret experiment on its users. Vox, 2014.

[16] Facebook manipulated users’ moods in secret experiment. Independent, 2014.

[17] Facebook plans voter turnout push – but will not bar false claims from Trump. The Guardian, 2020.

[18] Bombshell report: Facebook has known that it is fomenting extremism for years – and refuses to stop. Media Matters for America, 2020.

[19] Facebook fires employee who protested inaction on Trump posts. Reuters, 2020.

[20] Inside Facebook’s Years-Long Effort to Influence State Prosecutors. Tech Transparency Project, 2020.

[21] Facebook’s plan for privacy laws? ‘Co-creating’ them with Congress. Protocol, 2020.

[22] Zuckerberg once wanted to sanction Trump. Then Facebook wrote rules that accommodated him. Washington Post, 2020.

[23] IBM and the Holocaust: The Strategic Alliance between Nazi Germany and America’s Most Powerful Corporation. Edwin Black, 2001.

[24] Facebook is out of control. If it were a country it would be North Korea. The Guardian, 2020.